Are you a Netflix user? How do you think it manages to offer speedy access to its content from anywhere on the planet? For one, it offloads terabytes of streaming data, billions of hours’ worth, to Amazon Web Services (AWS).

But that is not the complete story. Netflix did not turn to AWS simply because AWS has vast numbers of servers and data centers to offer. In fact, renting raw data-center capacity at that scale would make it hard to keep monthly plans affordable for 150 million subscribers worldwide.

Containers: The Secret to Netflix’s Success

Instead, Netflix improves how it allocates resources for delivering HD video to far-flung corners of the earth, using a new-age technology called “containers.”

Containers are very lightweight: an image is often just a few MB in size, and a container can start in milliseconds. They achieve this by not booting an operating system of their own; instead, they share the host’s kernel and package only what the application needs to run. The principle is to strip the application down to its code, dependencies, system tools and libraries. Imagine the size of a virtual machine and divide it by 100 or so.

In fact, Netflix launches around one million such containers per week, and containers have become one of the hottest technologies around. This year, for example, they drew a lot of attention at the Mobile World Congress in Barcelona.

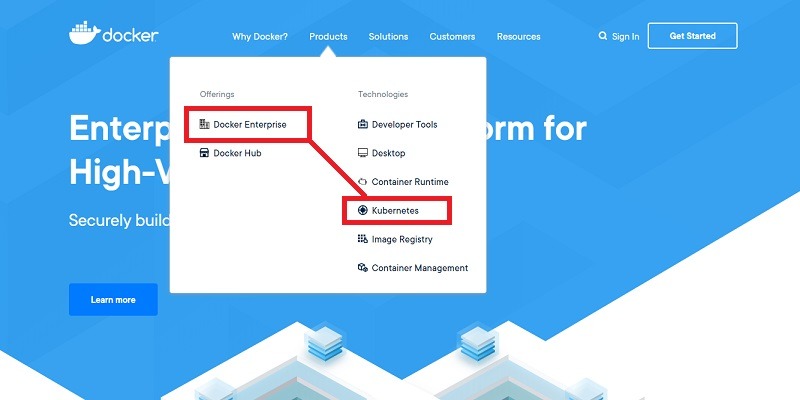

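Kubernetes, which we discussed earlier, is one example of a container orchestration platform: it schedules and manages containers across a cluster of machines. But it is not the only container technology. Here we will explore a closely related one called Docker, which packages applications into container images and runs them.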

What Is Docker?

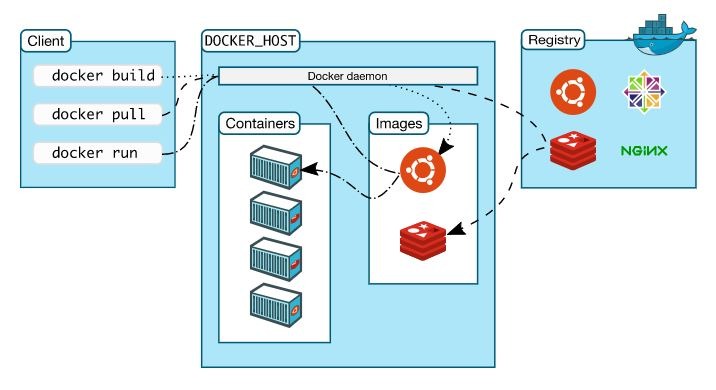

Docker is an independent, open-source container platform. Its public registry, Docker Hub, offers container images from various software vendors, open-source projects and the Docker community. The platform is extremely popular for cloud computing and virtualization workloads, and increasingly for edge IoT networks.

By doing away with the hypervisor and the full guest operating system each virtual machine needs, Docker makes workloads highly flexible: you can ship code anywhere as container images. Since these images are lightweight, you can run more Docker containers than virtual machines on a given set of hardware. This means lower costs and less storage.
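To make the density claim concrete, here is a back-of-the-envelope sketch in Go. The memory footprints are hypothetical numbers chosen only for illustration, and the sketch considers memory alone; real capacity also depends on CPU, disk and I/O.

```go
package main

import "fmt"

// instancesPerHost returns how many instances fit in a host's memory when each
// instance needs perInstanceMiB. Integer division drops any leftover memory.
func instancesPerHost(hostMiB, perInstanceMiB int) int {
	if perInstanceMiB <= 0 {
		return 0
	}
	return hostMiB / perInstanceMiB
}

// Hypothetical footprints, for illustration only: a VM carries a full guest
// OS plus the app; a container carries only the app and its libraries
// because it shares the host kernel.
const (
	hostMiB      = 32 * 1024 // assumed 32 GiB host
	vmMiB        = 2048      // assumed guest OS + app
	containerMiB = 200       // assumed app + dependencies only
)

// densityReport formats the comparison for the assumed numbers above.
func densityReport() string {
	return fmt.Sprintf("VMs: %d, containers: %d",
		instancesPerHost(hostMiB, vmMiB),
		instancesPerHost(hostMiB, containerMiB))
}
```

With these assumed numbers, the same 32 GiB host fits 16 VMs but 163 containers, because each container skips the guest OS.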

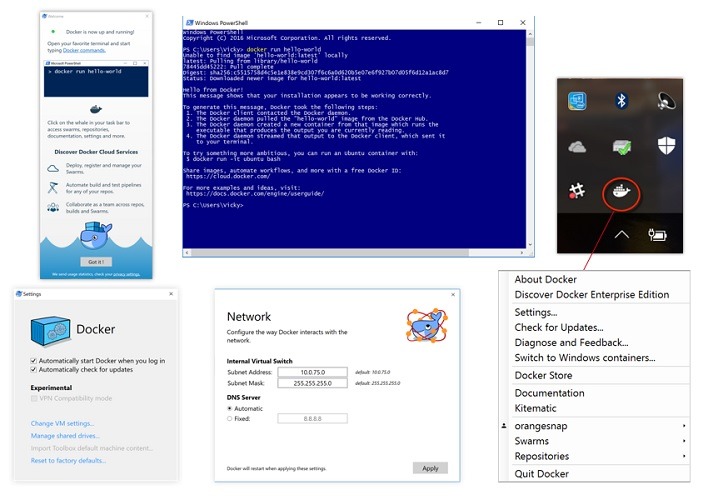

Docker is written in the Go programming language and supports Linux, Mac and Windows installations.

How Docker Is Used in IoT Deployments

Many wide-scale IoT deployments require access to edge devices which are spread out across multiple networks.

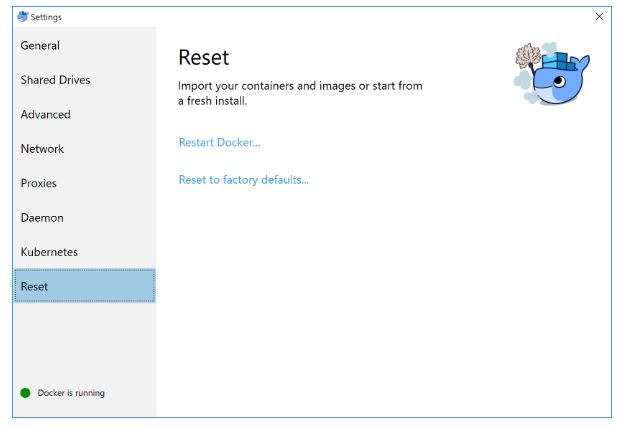

To control the edge apps, first install the Community Edition (CE) of Docker for Windows, Mac or Linux. Then run Minikube, which sets up a local single-node Kubernetes cluster, and kubectl, the Kubernetes command-line tool. Both come with rather complicated setup instructions.

Confused by the above? Don’t sweat it: as an end user of IoT, you will likely never come across Docker directly. For now, it is the preserve of IoT system administrators. Let’s move on to its main benefits.

Advantages of Docker in IoT Deployments

Docker brings many benefits to IoT deployments, but two stand out:

- Remote deployment at scale: the administrator does not have to leave the office to reach distant networks. All they have to do is connect to the network devices by their IP addresses and send them a Docker container image, which then takes instructions from the main hub.

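- Smaller size of applications: container images can be kept small, from a few kB for a single static binary to a few MB for a minimal Linux base, and because Docker ships images as layers, an update usually transfers only the layers that changed. That suits bandwidth-constrained edge networks. Low-power protocols such as ZigBee, Z-Wave, SigFox or LoRaWAN carry only small payloads at low data rates, so images usually reach an edge gateway over a faster link, and the containerized app on the gateway then talks to the sensors over the low-power network.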

In Summary

If the upcoming decade is going to be another “roaring ’20s,” courtesy of IoT, then containers and microservices will be the instruments behind the revolution. For this reason, container technology can be considered as important as service-oriented architecture (SOA), which revolutionized the Internet during the ’90s.

What are your thoughts about container technologies and Docker/Kubernetes? Let us know in the comments below.