The obvious drawback of autonomous vehicles is that we just don't trust them yet. It's hard to put total faith in a machine driving at 50 or 60 miles per hour. Safety questions have followed these vehicles throughout their development, and that could be a large part of why there are no consumer-owned autonomous vehicles on the road yet.

A cybersecurity research firm showed exactly why these self-driving cars can't be trusted yet. The researchers painted stripes on a road and were able to fool a Tesla into steering into oncoming traffic.

Hacked Tesla

Keen Labs said in a research paper that it tried two ways of tricking Autopilot's lane recognition by changing the road surface.

The first approach was to confuse Autopilot with blurry patches of road in the left lane. Tesla handled this experiment well: the car easily recognized what was happening, and the team found the attack difficult to carry out in a real-world scenario. Additionally, Tesla's cars have already been tested on many "abnormal lanes."

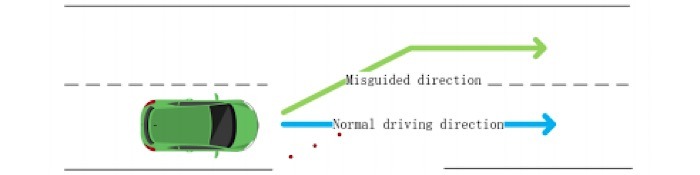

The second experiment tried to make Autopilot believe there was a traffic lane where there really wasn't one. The researchers painted three small squares in the traffic lane to look like merge striping.

This time the Autopilot feature was fooled.

“Misleading the Autopilot vehicle to the wrong direction [of traffic] with some patches made by a malicious attacker is sometimes more dangerous than making it fail to recognize it later,” said the researchers.

They added that "if the vehicle knows that the fake lane is pointing to the reverse lane, it should ignore this fake lane, and then it could avoid a traffic accident."

Tesla disputed that Keen's findings pose a problem, arguing that the scenario is unlikely to occur in real-world driving.

“In this demonstration the researchers adjusted the physical environment (e.g. placing tape on the road or altering lane lines) around the vehicle to make the car behave differently when Autopilot is in use,” said the company.

“This is not a real-world concern given that a driver can easily override Autopilot at any time by using the steering wheel or brakes and should be prepared to do so at all times.”

Nonetheless, a Tesla representative said that the company held Keen's findings in high regard. "We know it took an extraordinary amount of time, effort, and skill, and we look forward to reviewing future reports from this group," added the rep.

Will There Ever Be Enough Testing?

This does bring up an interesting question. Keen Labs was able to show a vulnerability in Tesla's Autopilot, one the company hadn't encountered before.

But will there ever be enough testing? How could you ever put an autonomous car through every possible situation? Road conditions will always keep changing. And if a human driver is always supposed to be ready to take over in case of an incident, then what's the purpose?

Add your thoughts to the comments section below on how you feel about Keen Labs' test of Tesla's Autopilot and whether you think autonomous driving will ever be road-ready for every situation.

Image Credit: Keen Labs via Business Insider