For the autonomous future to become a reality, an autonomous vehicle’s (AV) navigation capabilities must exceed those of a human driver. One of the biggest software requirements for driving in an urban environment is solving the “perception” challenge of on-board sensors. Perception refers to how the AV senses and interprets its immediate environment, comprising other vehicles, cyclists, pedestrians, objects, and other obstacles, so that it can respond appropriately. Solving it is what enables progress through the SAE levels of driving automation, from Level 1 (adaptive cruise control) to Level 5 (fully driverless operation).

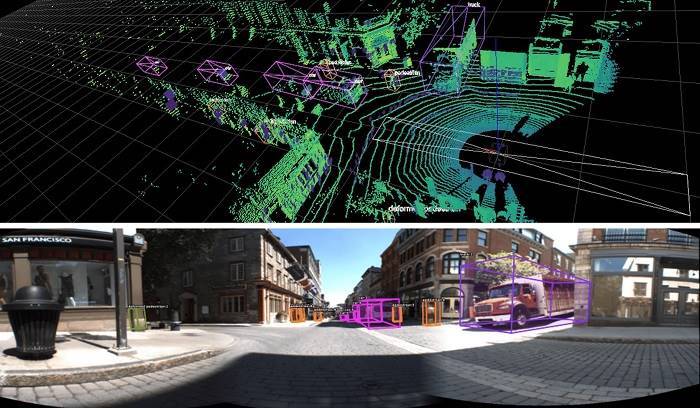

LiDAR (Light Detection and Ranging) has emerged as an important perception technology in the autonomous vehicle market. By capturing an obstacle’s position, elevation, and relative speed, a LiDAR sensor helps plot a dynamic 3D map of the vehicle’s surroundings, which feeds Advanced Driver Assistance Systems (ADAS) and Autonomous Driving (AD) systems. Such enhanced visibility is critical for safe vehicle operation.

To learn the industry perspective on LiDAR’s effectiveness, we spoke to Québec-based LeddarTech, one of the market leaders in this technology. In an exclusive interview, its Chief Technology Officer (CTO), Mr. Pierre Olivier, shared his thoughts on how LeddarTech’s LiDAR-based perception platform meets basic functional safety standards while trying to overcome LiDAR limitations such as signal degradation in bad weather.

LiDAR vs. Radar vs. Motion Cameras

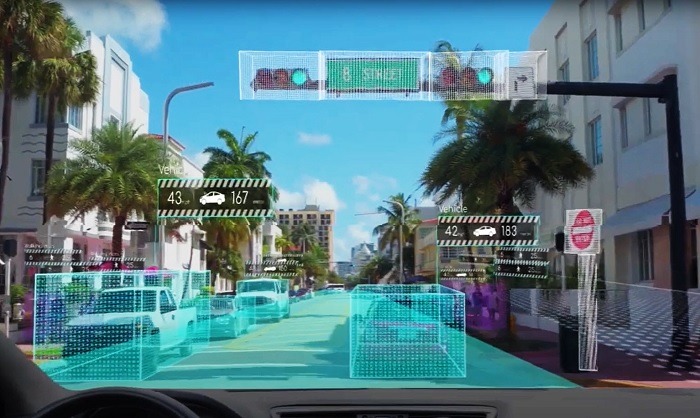

For enhanced AV perception, is LiDAR better than radar or motion cameras? There are no easy answers to this question, as we previously saw in our LiDAR vs. Radar comparison. LeddarTech takes a different view: although it is a versatile LiDAR solution developer, its LeddarEngine platform is more all-encompassing. The following footage from Québec City shows LeddarTech’s test vehicles equipped with a full sensor suite (LiDAR, camera, radar, and IMU). This setup collects real-world data to enable what is known as “sensor fusion” for ADAS and AD.

Says Pierre: “We don’t see ourselves as only a LiDAR company. [Concerning LiDAR, radar and motion cameras], all these technologies are complementary, and there’s no one sensor modality that can address all requirements. The [main] requirements include long-range, wide field of view and accurate depth measurement. If you take cameras, for example, they’re very good at accurate lateral positioning because they’re offering high-resolution images. But they can’t provide accurate depth measurement – unless you get into stereo cameras [binocular vision, 3D imagery etc.], but these also have their own limitations.”
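To make the complementarity point concrete, here is a minimal Python sketch of one textbook way to combine two sensors: inverse-variance weighting, applied to each axis separately. It assumes independent Gaussian errors and uses made-up variances for a camera that is precise laterally but poor in depth and a range sensor with the opposite profile. The `Estimate` type and `fuse` function are hypothetical illustrations, not part of LeddarTech’s software, which fuses raw data rather than finished estimates.

```python
from dataclasses import dataclass

@dataclass
class Estimate:
    """A 2D position estimate in metres with per-axis variances in m^2."""
    x: float      # longitudinal (depth) position
    y: float      # lateral position
    var_x: float
    var_y: float

def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Combine two independent estimates axis by axis with inverse-variance weights."""
    def combine(m1, v1, m2, v2):
        w1, w2 = 1.0 / v1, 1.0 / v2
        return (w1 * m1 + w2 * m2) / (w1 + w2), 1.0 / (w1 + w2)

    x, var_x = combine(a.x, a.var_x, b.x, b.var_x)
    y, var_y = combine(a.y, a.var_y, b.y, b.var_y)
    return Estimate(x, y, var_x, var_y)

# Hypothetical numbers: the camera is sharp laterally but vague in depth,
# while the range sensor (LiDAR or radar) is the opposite.
camera = Estimate(x=42.0, y=1.50, var_x=4.0, var_y=0.01)
ranger = Estimate(x=40.0, y=1.80, var_x=0.04, var_y=0.25)
fused = fuse(camera, ranger)
print(round(fused.x, 2), round(fused.y, 2))  # prints 40.02 1.51
```

With these assumed numbers, the fused depth comes mostly from the range sensor and the fused lateral position mostly from the camera, and both fused variances are smaller than the better of the two inputs on each axis.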

It’s well known that a technology like 4D imaging radar performs very well in harsh weather conditions, but its lower resolution is a drawback for ADAS. However, we have seen that some solution vendors are actively addressing that challenge by integrating higher-frequency capabilities into radar (61-81 GHz). Still, Pierre notes that “radar solutions don’t work very well with static objects because there tends to be a lot of clutter, so it has limitations in a moving environment.”

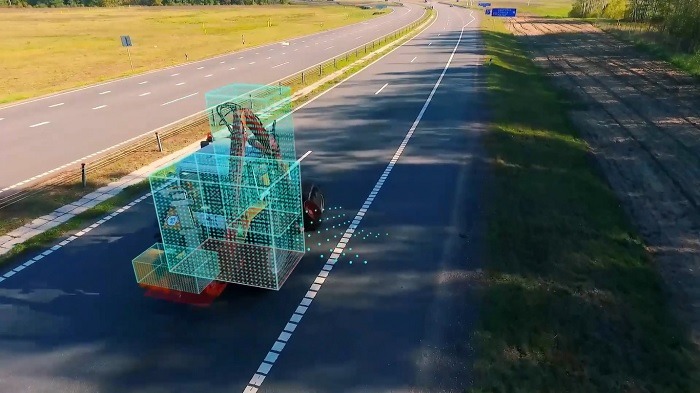

LeddarVision, LeddarTech’s key solution, is not purely about LiDAR: it is a sensor fusion and perception solution that delivers highly accurate 3D environmental models for all kinds of autonomous vehicles. It offers a full software stack supporting all SAE autonomy levels by applying AI and computer vision algorithms that fuse raw data from radar and camera for L2 applications, and from camera, radar, and LiDAR for L3-L5 applications.

These higher autonomy levels require advanced capabilities, such as detection of unidentified objects, bird’s-eye-view occupancy grids, and precise three-dimensional bounding boxes with accurate position, orientation, and velocity. The perception algorithm should not miss even a tiny bird in the distance.
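An occupancy grid is easier to picture in code. The sketch below, assuming NumPy and a point cloud already expressed in the vehicle frame, bins LiDAR points into a bird’s-eye-view grid and marks a cell as occupied once it holds enough points above an assumed ground height. Production systems typically use probabilistic (log-odds) grids fed by several sensors; the function name, grid extents, cell size, and thresholds here are all hypothetical.

```python
import numpy as np

def bev_occupancy_grid(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0),
                       cell_size=0.5, ground_z=0.2, min_hits=1):
    """Rasterise an (N, 3) array of x, y, z points (metres, vehicle frame) into a
    bird's-eye-view grid. A cell is True when it holds at least `min_hits` points
    higher than `ground_z`, a crude stand-in for proper ground removal."""
    pts = np.asarray(points, dtype=float).reshape(-1, 3)
    pts = pts[pts[:, 2] > ground_z]
    nx = int(round((x_range[1] - x_range[0]) / cell_size))
    ny = int(round((y_range[1] - y_range[0]) / cell_size))
    ix = np.floor((pts[:, 0] - x_range[0]) / cell_size).astype(int)
    iy = np.floor((pts[:, 1] - y_range[0]) / cell_size).astype(int)
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)  # ignore points beyond the grid
    counts = np.zeros((nx, ny), dtype=int)
    np.add.at(counts, (ix[inside], iy[inside]), 1)  # unbuffered add handles repeated cells
    return counts >= min_hits

# Hypothetical returns: two hits on one obstacle, one ground hit, one out-of-range point.
points = [[10.2, 0.3, 0.8], [10.4, 0.1, 1.1], [25.0, -3.0, 0.05], [55.0, 0.0, 1.0]]
grid = bev_occupancy_grid(points)
print(grid.shape, int(grid.sum()))
```

Here the two obstacle hits fall into the same 0.5 m cell, the low point is treated as ground, and the point at 55 m lies outside the 40 m grid, so exactly one cell is marked occupied.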

Raw sensor data fusion using LiDAR, radar, motion cameras, and geolocation technologies looks like the future of AV perception. While LeddarTech uses LiDAR sensors for higher SAE autonomy levels, other perception developers may take a different approach in which, for example, radar plays a larger role. However, based on Pierre’s explanation, the current industry consensus is that, rather than choosing between LiDAR and radar, integrated solutions are the only way forward in autonomous perception.

LiDAR Sensors of LeddarTech

LeddarTech tries to address LiDAR’s limitations through its R&D and innovation efforts. According to Pierre, “LiDAR can provide good resolution, but it struggles a bit more during harsh weather.” It is, however, very effective at coping with shadows and the blinking lights of other vehicles. Pierre explains: “LiDAR is very good in that respect. The shadows, for example, are not an issue because LiDAR only beams off solid hard objects.”

He adds: “Our [LiDAR sensor] technology addresses a number of areas. The first is that each of our measures are built from a high number of light pulses, so it’s not just one or a few light pulses, as is the case with some of the other mechanical LiDAR solutions. Typically, it’s between 10 to 50 light pulses which make one measurement. So, by the very nature of the measurement method, there’s a higher resilience to interference. We are able to distinguish the signal patterns in the digital waveforms and filter them out. Our technology will have a degradation in performance, but it has a more ‘graceful degradation’ than other technologies.”
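Why do 10 to 50 pulses per measurement help? Averaging N waveforms leaves the echo unchanged while shrinking uncorrelated noise by roughly a factor of √N, and a stray pulse from another LiDAR that lands in only a few of the N waveforms is diluted in the average. The NumPy simulation below illustrates this generic principle, with range recovered from time of flight as d = c·t/2. The waveform model (a Gaussian echo in white noise), sample rate, amplitudes, and function names are assumptions; LeddarTech’s actual signal processing is not described in this interview.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s

def simulate_waveform(distance_m, rng, n_samples=400, sample_period_s=1e-9,
                      amplitude=1.0, pulse_sigma_s=3e-9, noise_std=1.0):
    """Hypothetical digitised return for one light pulse: a Gaussian echo centred on
    the round-trip delay 2*d/c, plus white noise."""
    t = np.arange(n_samples) * sample_period_s
    delay = 2.0 * distance_m / C
    echo = amplitude * np.exp(-0.5 * ((t - delay) / pulse_sigma_s) ** 2)
    return echo + rng.normal(0.0, noise_std, n_samples)

def estimate_distance(waveforms, sample_period_s=1e-9):
    """Average the waveforms sample by sample, locate the peak, and convert its
    time of flight to range with d = c * t / 2."""
    mean_waveform = np.mean(np.asarray(waveforms), axis=0)
    peak_time_s = np.argmax(mean_waveform) * sample_period_s
    return C * peak_time_s / 2.0

rng = np.random.default_rng(0)
# 50 pulses for one measurement, the upper end of the range quoted above.
pulses = np.stack([simulate_waveform(30.0, rng) for _ in range(50)])

print(f"1 pulse:   {estimate_distance(pulses[:1]):.2f} m")  # SNR of about 1: unreliable
print(f"50 pulses: {estimate_distance(pulses):.2f} m")      # noise reduced by about sqrt(50)
```

With a single pulse at this assumed noise level, the peak search is likely to lock onto a noise spike, whereas the 50-pulse average should land within a fraction of a metre of the simulated 30 m target.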

LeddarTech’s LiDAR sensors rely on signal processing techniques that enable the detection of weaker signals. One of the biggest challenges in LiDAR is signal interference. Says Pierre: “From a LiDAR standpoint, what is transmitted out is called [a] point cloud. Each measurement is stacked with the elevation and distance. Indeed, the main issue with LiDAR is the interference from other LiDAR signals. There is much more capability to filter out any residual interference at a signal processing level.”
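As a rough illustration of how individual measurements become a point cloud, the sketch below converts a range reading taken along a known beam direction (azimuth and elevation) into x/y/z coordinates. The axis convention and the `to_cartesian` helper are assumptions for illustration; real sensors document their own coordinate frames and output formats.

```python
import math
from typing import NamedTuple

class Point(NamedTuple):
    x: float  # forward, metres
    y: float  # left, metres
    z: float  # up, metres

def to_cartesian(distance_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one range reading along a beam direction into an x/y/z point.
    Assumed convention: azimuth is measured from the forward axis towards the left,
    elevation from the horizontal plane upwards."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    ground_range = distance_m * math.cos(el)  # projection onto the horizontal plane
    return Point(ground_range * math.cos(az),
                 ground_range * math.sin(az),
                 distance_m * math.sin(el))

# Hypothetical (distance, azimuth, elevation) readings from one frame.
readings = [(20.0, 0.0, 0.0), (10.0, 90.0, 0.0), (5.0, 0.0, 30.0)]
cloud = [to_cartesian(*r) for r in readings]
for p in cloud:
    print(tuple(round(c, 2) for c in p))
```

Real sensors also apply per-beam calibration offsets and the sensor’s mounting pose on the vehicle, both of which this sketch omits.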

LeddarTech’s LiDAR sensors use a multi-pulse measurement method to reduce signal degradation caused by inclement weather, changing light conditions, and mutual interference between sensors. Pierre believes this is a lot more effective than point-based measurement methods. The company’s other LiDAR sensor solutions include Leddar d-tec, an above-ground stop-bar detection system; Leddar T16, a LiDAR traffic sensor; and LeddarOne, a single-segment LiDAR sensor module.

Solid State LiDAR

Solid-state LiDAR technology makes a compelling case for itself and is rapidly becoming the LiDAR technology of choice for AV makers, not least because of its cost advantage. But what does “solid state” really mean?

According to Pierre: “What customers mean when they say solid state LiDAR, it refers to ‘no moving parts,’ whatsoever. If you take a camera, there’s an imager and a lens. With radar, there’s an RF chip and antenna. With [mechanical] LiDAR, there’s a motor and other components which physically rotate. What we’re building is true solid state LiDAR, so the ability to produce at a lower cost is much better. [There are no] mechanical components such as motors. [Also], because you don’t have mechanical components, the assembly is much easier.”

LeddarTech is currently on its 14th generation of solid-state LiDAR technology. One of its applications is Leddar Pixell, a LiDAR sensor for mobility applications that features a 100 percent solid-state design, a fanless IP67 enclosure, improved shock and vibration resistance, and a wide operating temperature range. There is also Leddar PixSet, a publicly available dataset launched in February 2021. It combines data from a full sensor suite and includes full-waveform data from a 3D solid-state flash LiDAR sensor, as shown in the figure above.

Concluding Thoughts

With 95 patents pending or granted, LeddarTech is betting big on the autonomous market. In Pierre’s personal view, “Real high-volume deployment of autonomous vehicles (AV) won’t be there until 2030, but from 2025, it might accelerate more.” He also insists that the LiDAR industry needs a degree of consolidation.

He says: “What we think is that LiDAR has to take the [same] path as camera and radar. The solutions are to be industrialized in high volume. There can’t be hundreds of vendors that are going to be like clones of one another.”

So is integration of LiDAR solutions a reality? Pierre concludes: “As a company, we’re trying to accelerate that. We’re making our technology available as components. We view ourselves as a technology provider that provides components both for LiDAR and perception to the various customers. We want to serve the complete market.”

(Arc de Triomphe, Paris – Featured Image Credit: LeddarTech Global Marketing & Communications team)